Ollama vs LM Studio

What are Ollama and LM Studio?

Ollama and LM Studio are both tools for running local models on your personal computer. By local models I mean open-weight models like DeepSeek or gpt-oss that you download and run yourself, so you get a ChatGPT-style assistant on your own machine. The best part is that your information never leaves your computer, so your chats aren’t sent to other companies, as they are with ChatGPT. That’s great if you want a model to look at your personal journal, your finances, or anything else you don’t want companies to see.

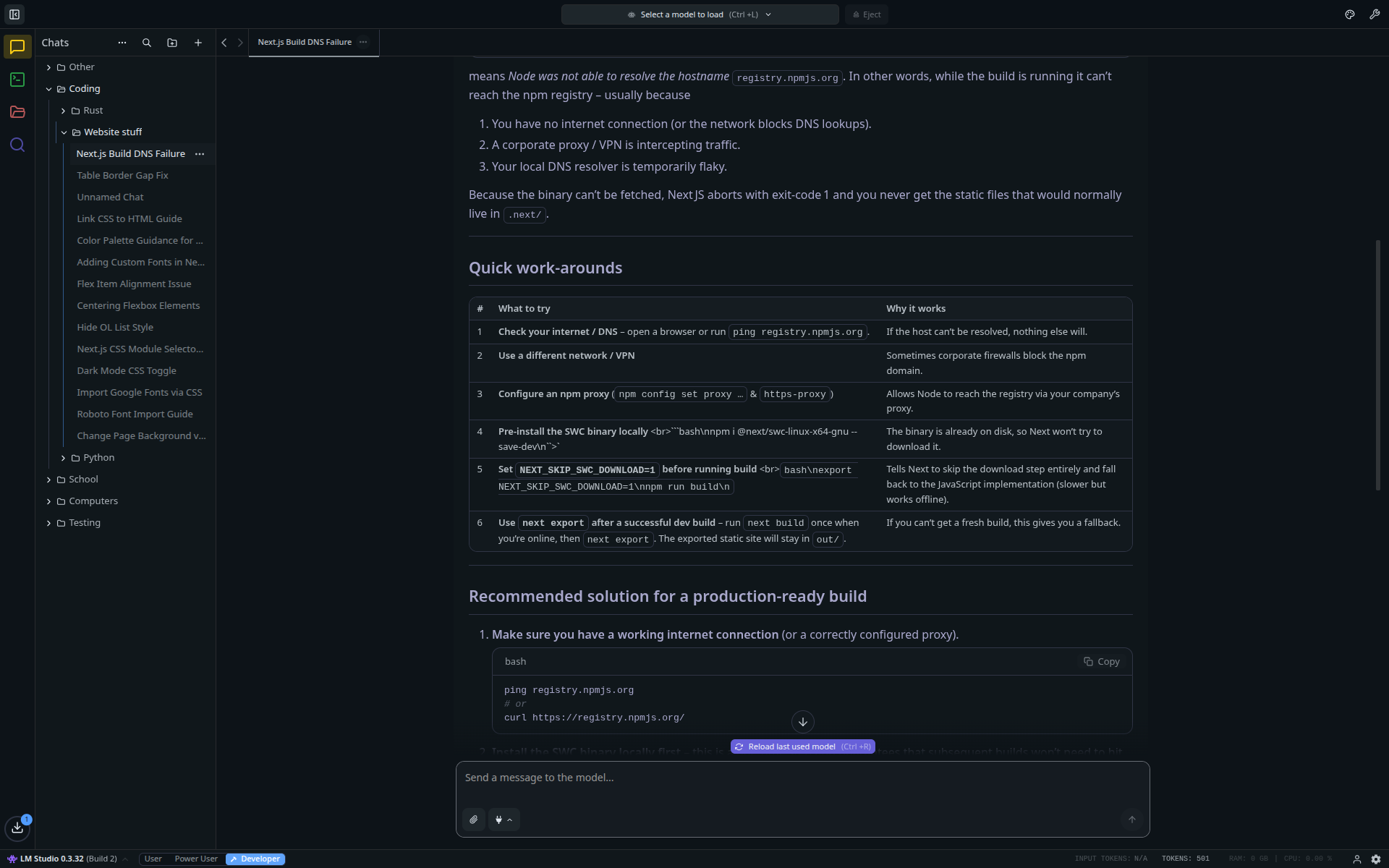

What is different about LM Studio?

Here are some things LM Studio offers that set it apart from Ollama:

- It has a very user friendly GUI (graphical user interface).

- The GUI is very similar to ChatGPT’s, or to the chat interface of just about any other LLM company.

- LM Studio has a wide range of models you can choose from.

- LM Studio also renders the Markdown that the LLM produces.

- It has an API server that you can host so that others can access your models.
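
To make the API point concrete, here is a minimal sketch of sending one prompt to LM Studio’s local server from Python. It assumes the server is switched on inside LM Studio, that it listens on the default port 1234, and that it accepts OpenAI-style chat requests. The function names and the model name are placeholders of mine, not anything LM Studio defines.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port (1234).
LM_STUDIO_URL = "http://localhost:1234/v1/chat/completions"


def build_chat_request(model: str, prompt: str) -> dict:
    """Build an OpenAI-style chat completion payload (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "temperature": 0.7,
    }


def ask_lm_studio(model: str, prompt: str, url: str = LM_STUDIO_URL) -> str:
    """Send one prompt to the local server and return the reply text."""
    body = json.dumps(build_chat_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    # OpenAI-style responses put the reply under choices[0].message.content.
    return data["choices"][0]["message"]["content"]


# Example (needs the server running and a model loaded; the name is a placeholder):
# print(ask_lm_studio("your-model-name", "Summarize this journal entry: ..."))
```

Nothing here leaves your machine: the request goes to `localhost`, so the privacy benefit still holds when you use the API instead of the GUI.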

I would suggest LM Studio if you aren’t very technical. It’s a great place to start if you want to learn how to run LLMs locally.

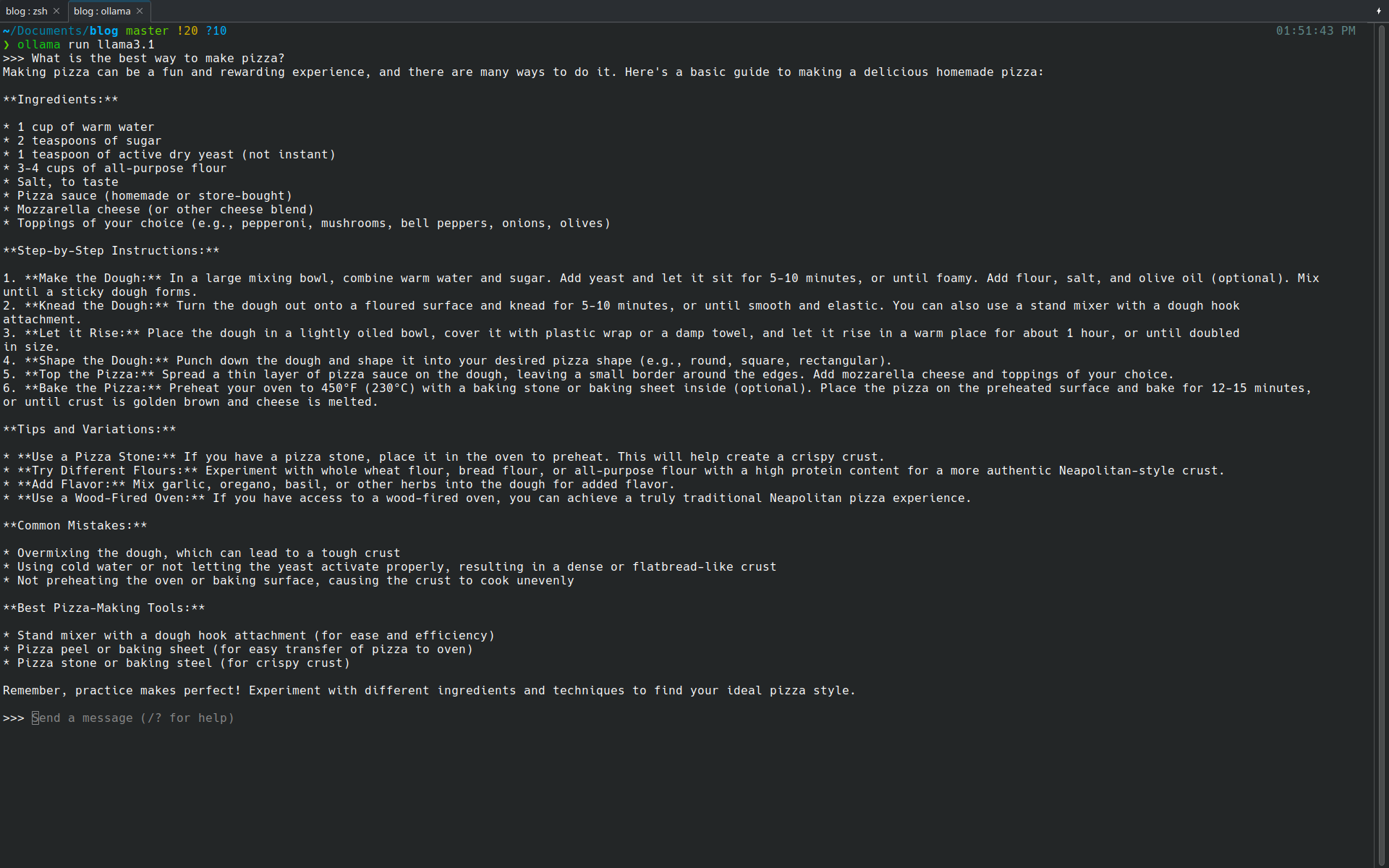

What is different about Ollama?

Ollama is very similar to LM Studio, with a few differences:

- It all runs in the CLI (command-line interface), in other words the terminal. If you’re a terminal person, you will like this one.

- It has a very good API. Unlike LM Studio, at the time of writing it can handle multiple API requests at once.

- It’s very easy to integrate its API into apps you build.

- Cloud models are in preview at the time of writing. Ollama says it doesn’t store any data, so that’s a big win for us. And it really does have a generous free tier.

- Of the two, Ollama is the more popular.
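
Here is a rough sketch of what “multiple API calls at once” can look like from Python. It assumes Ollama is running locally on its default port 11434 and uses its `/api/chat` endpoint with streaming turned off. The helper names and the model name are placeholders of mine. Whether the server really processes the requests in parallel or queues them depends on your Ollama settings and how much memory you have.

```python
import json
import urllib.request
from concurrent.futures import ThreadPoolExecutor

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_ollama_request(model: str, prompt: str) -> dict:
    """Build a non-streaming chat payload for Ollama (hypothetical helper)."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one JSON reply instead of a stream of chunks
    }


def ask_ollama(model: str, prompt: str, url: str = OLLAMA_CHAT_URL) -> str:
    """Send one prompt to Ollama and return the reply text."""
    body = json.dumps(build_ollama_request(model, prompt)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as response:
        data = json.load(response)
    return data["message"]["content"]


def ask_many(model: str, prompts: list, ask=ask_ollama, max_workers: int = 4) -> list:
    """Send several prompts at once; replies come back in the same order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda p: ask(model, p), prompts))


# Example (needs Ollama running and the model pulled; the name is a placeholder):
# replies = ask_many("your-model-name", ["Explain RAM.", "Explain VRAM."])
```

The `ask` parameter is only there so you can swap in a fake function while testing, without a server running.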

I would use Ollama if:

- You want integration with other apps. Most AI apps let you connect them to Ollama.

- You’re working with an API.

- You like working in the terminal.
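
On the integration point, here is a small sketch of how an app might find out which models your Ollama install has available. It assumes Ollama’s `/api/tags` endpoint on the default port 11434, which returns a JSON object with a `models` list. The function names are mine, and this is a guess at how such an integration could work, not how any particular app does it.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_TAGS_URL = "http://localhost:11434/api/tags"


def parse_model_names(tags_response: dict) -> list:
    """Pull the model names out of an /api/tags style response."""
    return [model["name"] for model in tags_response.get("models", [])]


def list_local_models(url: str = OLLAMA_TAGS_URL) -> list:
    """Ask the local Ollama server which models are installed."""
    with urllib.request.urlopen(url) as response:
        return parse_model_names(json.load(response))


# Example (needs Ollama running):
# for name in list_local_models():
#     print(name)
```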

Which one is the best?

I think Ollama wins because of its powerful API. Plus, Ollama has cloud models, and it says it doesn’t store your data. And you can run powerful models like gpt-oss-120b for free. I use Ollama too because, like I said, it has a great API: I have Open WebUI connected to Ollama to run my models locally. If you haven’t tried Ollama or LM Studio, you should check them out; they’re great. That’s it from me. Peace.

Ollama models I use:

Links: